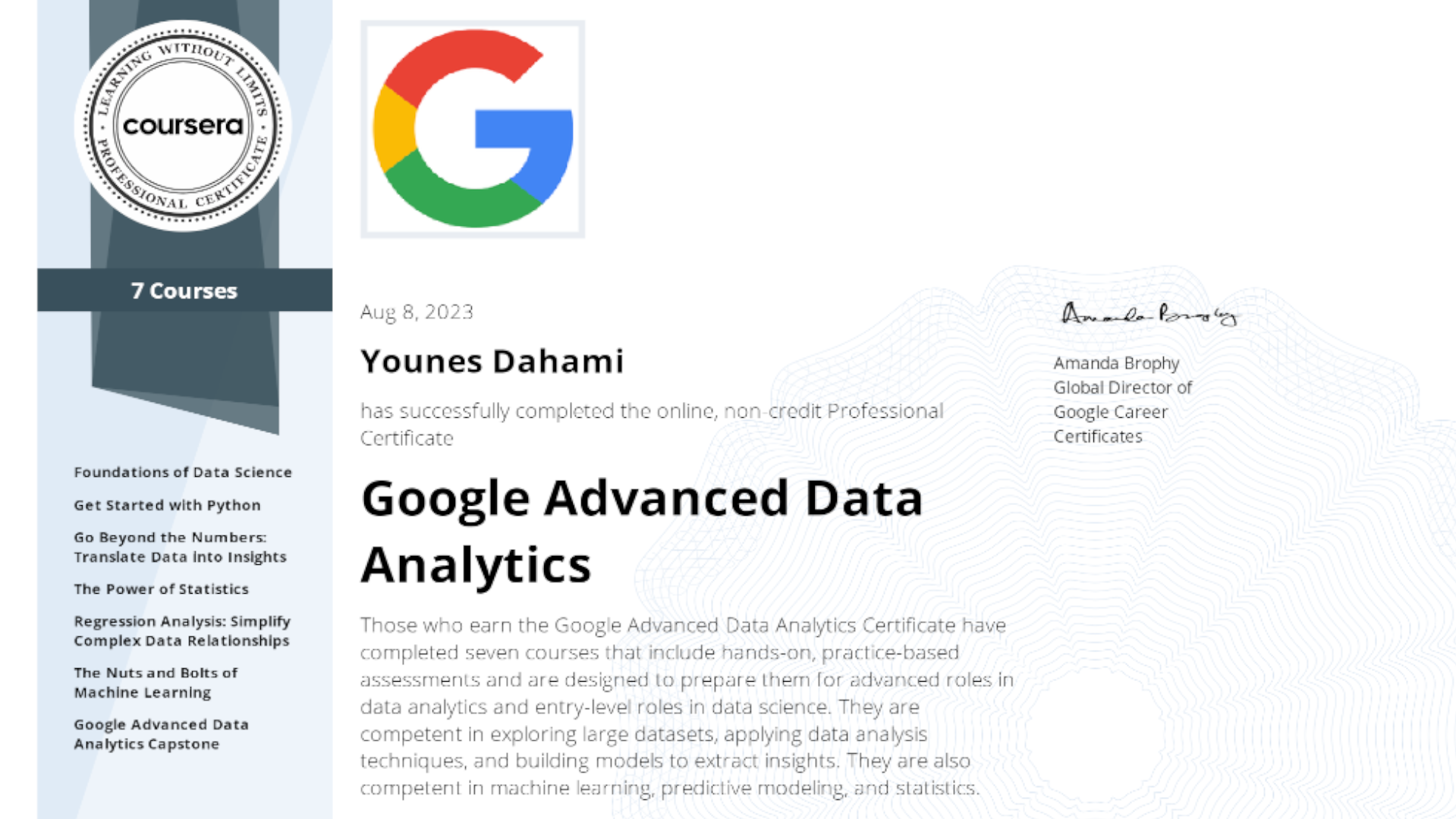

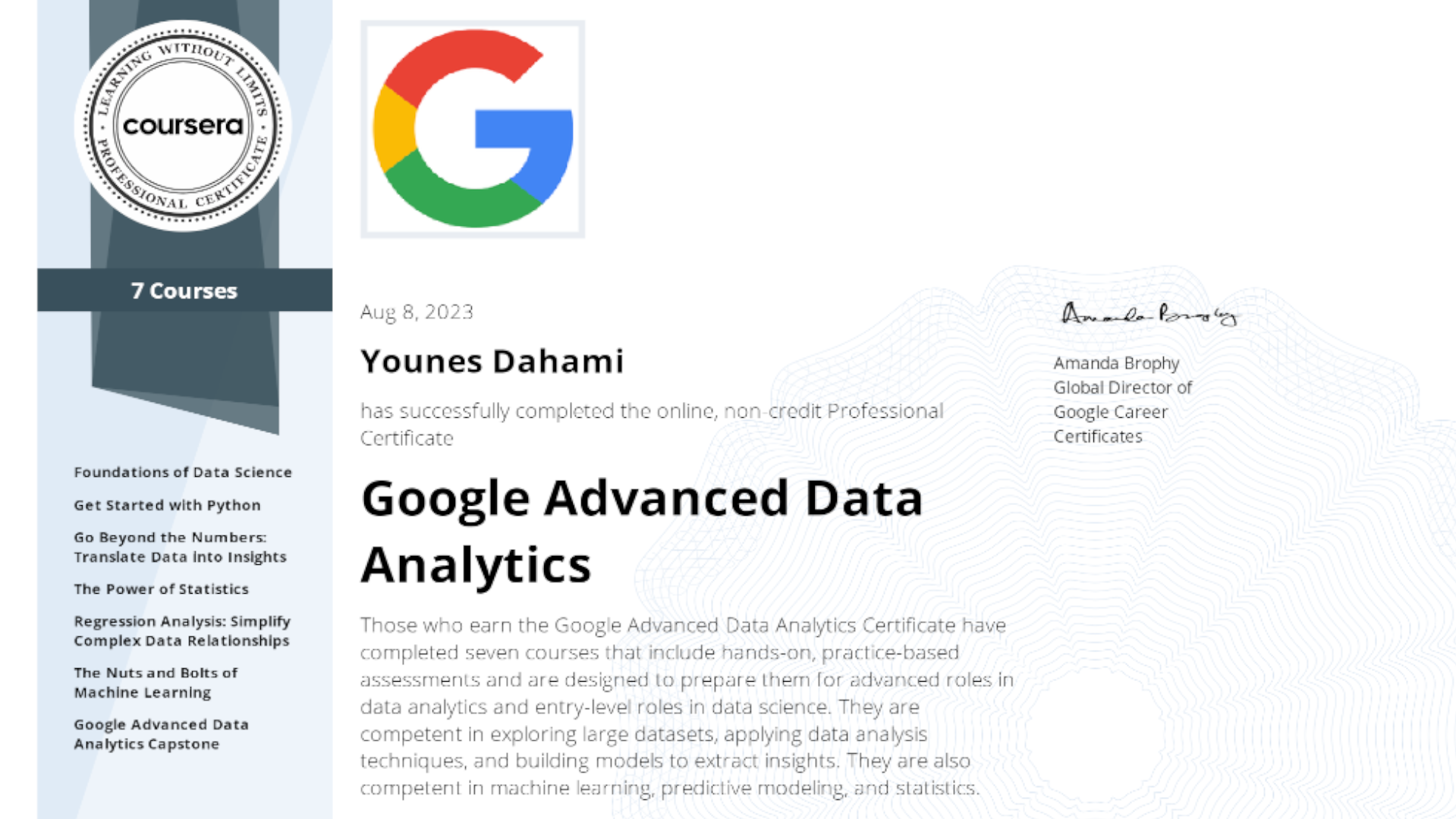

Google Advanced Data Analytics Professional Certificate

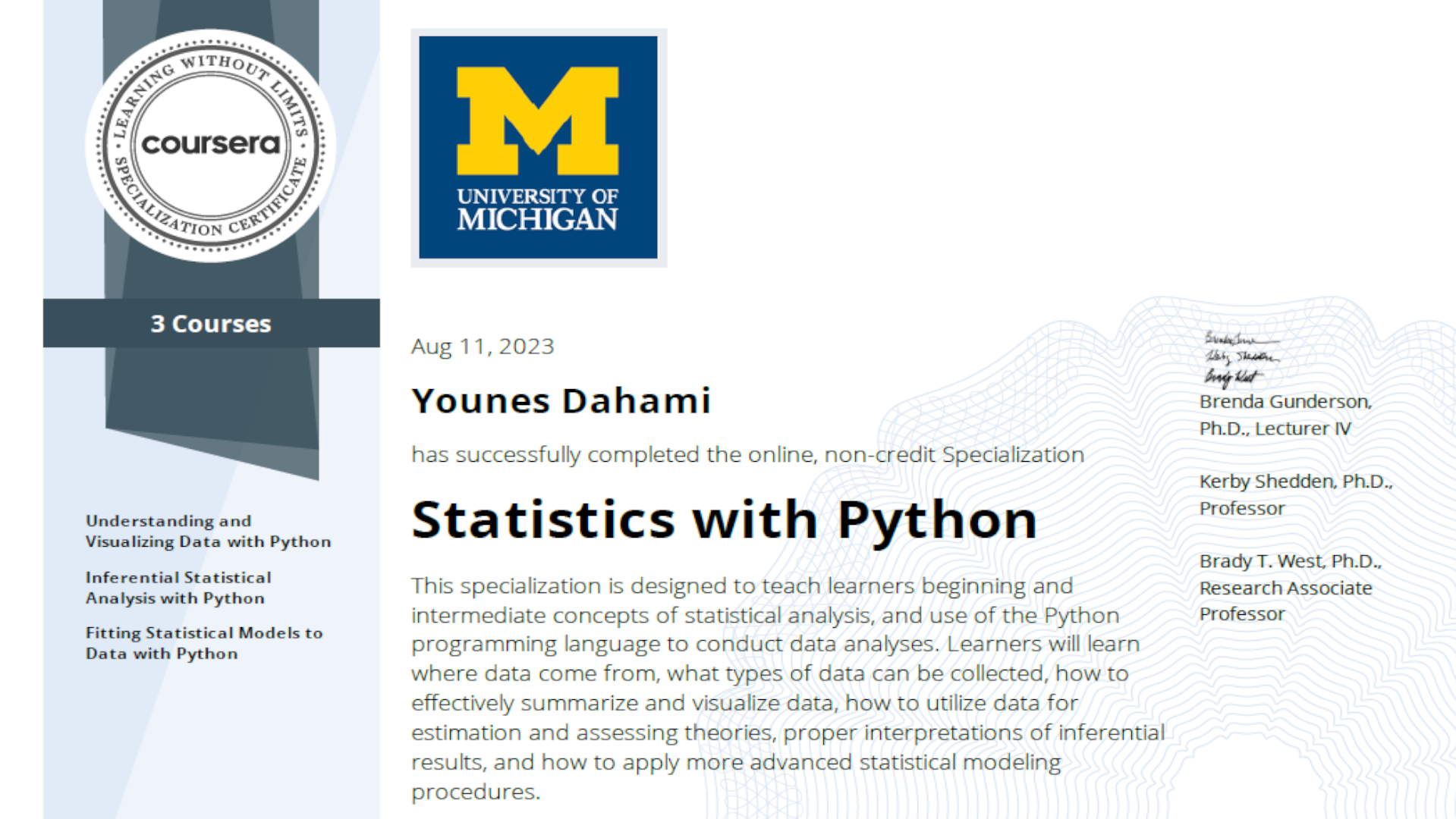

Stochastic Modeling and Statistics

Skills: Python, R, SQL, SAS, SPSS, and Tableau.

@Dahami

In this project, we constructed a Generative Pre-trained Transformer (GPT), a decoder-only Transformer, inspired by the paper "Attention Is All You Need" and OpenAI's GPT-2 and GPT-3 models.
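To illustrate what "decoder-only" means, here is a minimal sketch of single-head causal self-attention in plain Python. It assumes the query, key, and value vectors are already given; a real GPT computes them with learned linear projections and stacks many heads and layers. The function names are hypothetical and not taken from the project's code.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(q, k, v):
    """Single-head scaled dot-product attention with a causal mask.

    q and k are lists of T vectors of dimension d; v is a list of T vectors.
    Position t may only attend to positions 0..t, which is what makes a
    decoder-only model autoregressive.
    """
    d = len(q[0])
    dv = len(v[0])
    out = []
    for t in range(len(q)):
        # Scores against the current and earlier positions only (the mask).
        scores = [sum(a * b for a, b in zip(q[t], k[s])) / math.sqrt(d)
                  for s in range(t + 1)]
        weights = softmax(scores)
        # Weighted sum of the visible value vectors.
        out.append([sum(w * v[s][j] for s, w in enumerate(weights))
                    for j in range(dv)])
    return out
```

Because each position sees only earlier positions, the model can be trained to predict the next token at every position of a sequence in parallel.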


"makemore" takes one text file as input, where each line is assumed to be one training example, and generates more examples similar to those provided. Under the hood, it is an autoregressive character-level language model with a wide choice of models, from bigrams all the way to a Transformer (exactly as seen in GPT). For instance, given a dataset of names, makemore can generate unique baby name suggestions that sound name-like but are not existing names. Similarly, given a list of company names, it can generate new company name ideas. Alternatively, given a list of valid Scrabble words, it can generate English-like text.
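The simplest model in that range is the bigram. The sketch below, written in plain Python and not taken from makemore itself, counts how often each character follows another and samples new words from those counts. It uses `.` as a hypothetical start/end marker, so input words are assumed not to contain `.`.

```python
import random
from collections import defaultdict

def train_bigram(words):
    """Count character bigrams, with '.' marking the start and end of a word."""
    counts = defaultdict(lambda: defaultdict(int))
    for w in words:
        chars = ["."] + list(w) + ["."]
        for a, b in zip(chars, chars[1:]):
            counts[a][b] += 1
    return counts

def sample(counts, rng, max_len=20):
    """Generate one word by repeatedly sampling the next character."""
    out, ch = [], "."
    while len(out) < max_len:
        nxt = counts[ch]
        chars = list(nxt)
        # Sample the next character in proportion to how often it followed `ch`.
        ch = rng.choices(chars, weights=[nxt[c] for c in chars])[0]
        if ch == ".":
            break  # end-of-word marker
        out.append(ch)
    return "".join(out)
```

Usage: `sample(train_bigram(["emma", "olivia", "ava"]), random.Random(0))` returns a short name-like string built only from character transitions seen in the training names.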

This project serves as our gateway to extracting vital information from large amounts of text. We explore leading libraries and simplify the entire process step by step. By the end, we will be able to condense lengthy articles, research papers, and documents into concise, easily digestible summaries.
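The project description does not specify which libraries are used, so as one illustrative approach, here is a minimal frequency-based extractive summarizer in plain Python. It scores each sentence by the average frequency of its non-stopword terms and keeps the top-scoring sentences in their original order. The small stopword list and the sentence-splitting regex are simplifying assumptions.

```python
import re
from collections import Counter

# A deliberately tiny stopword list; real projects use a fuller one.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it", "that",
             "for", "on", "with", "as", "are", "was"}

def content_words(text):
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]

def summarize(text, n_sentences=2):
    """Return the n highest-scoring sentences, in their original order."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    freq = Counter(content_words(text))

    def score(sentence):
        toks = content_words(sentence)
        # Average rather than total frequency, so long sentences are not automatically favoured.
        return sum(freq[w] for w in toks) / (len(toks) or 1)

    top = sorted(range(len(sentences)), key=lambda i: score(sentences[i]),
                 reverse=True)[:n_sentences]
    return " ".join(sentences[i] for i in sorted(top))
```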

In this project we created and used a neural network to classify articles of clothing.

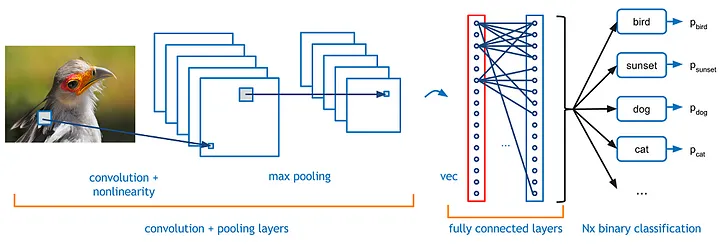

In this project, we performed image classification and object detection/recognition using deep computer vision with a convolutional neural network (CNN). The goal of our CNNs is to classify images and to detect specific objects within them.
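The operation that gives CNNs their name is the 2-D convolution of an image with a small kernel. This plain-Python sketch (hypothetical helper names, no padding, stride 1) slides a hand-picked vertical-edge kernel over a tiny grayscale image and applies a ReLU. In a trained CNN the kernel weights are learned rather than chosen by hand.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (strictly cross-correlation, as in most CNN libraries)."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    # Each output pixel is the dot product of the kernel with the patch under it.
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(ow)]
            for i in range(oh)]

def relu(feature_map):
    """Element-wise ReLU: keep positive responses, zero out the rest."""
    return [[max(0, x) for x in row] for row in feature_map]

image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
vertical_edge = [[-1, 1],
                 [-1, 1]]
```

Here `conv2d(image, vertical_edge)` responds only in the column where the dark-to-bright edge sits; stacking many such learned filters, nonlinearities, and pooling layers is what lets a CNN recognize objects.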

In this project, we used various predictive models and compared how accurately they detect whether a transaction is a normal payment or fraud.
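Fraud datasets are usually highly imbalanced, so accuracy alone can be misleading when comparing models. A minimal sketch of the metrics typically used instead, assuming labels are encoded as 1 for fraud and 0 for a normal payment (the function name is hypothetical):

```python
def classification_report(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = fraud)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # frauds caught
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # frauds missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}
```

For instance, a model that labels every transaction as normal on a dataset with 1% fraud scores 99% accuracy but 0% recall, which is why precision, recall, and F1 are the more informative comparison.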

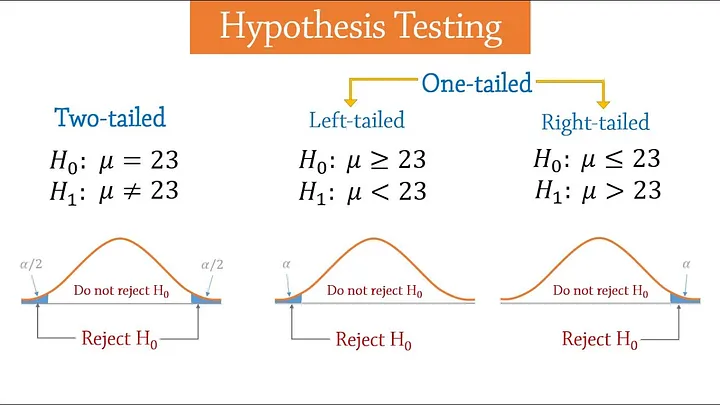

We demonstrate formal hypothesis testing using the NHANES data.
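As a sketch of one common formal test (not necessarily the one used in the project), the function below computes Welch's two-sample t statistic for a difference in means. It assumes large samples, as in NHANES, so the two-sided p-value is approximated with the standard normal distribution from Python's `statistics` module. For small samples, `scipy.stats.ttest_ind(x, y, equal_var=False)` uses the exact t distribution.

```python
import math
from statistics import NormalDist, mean, variance

def welch_test(x, y):
    """Welch's t statistic and a two-sided p-value (normal approximation).

    Assumption: both samples are large, so the t distribution is close to
    the standard normal. H0: the two population means are equal.
    """
    # Standard error of the difference in means, without assuming equal variances.
    se = math.sqrt(variance(x) / len(x) + variance(y) / len(y))
    t = (mean(x) - mean(y)) / se
    p = 2 * (1 - NormalDist().cdf(abs(t)))
    return t, p
```

A small p-value (for example, below a pre-chosen significance level of 0.05) leads us to reject the null hypothesis of equal means.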

This project uses TensorFlow to predict stock prices across multiple companies with deep learning. Leveraging TensorFlow's capabilities, we implement a model that analyzes historical stock market data and predicts future prices.
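Deep learning models for price prediction are typically trained on sliding windows of past prices. The plain-Python sketch below (hypothetical function names; the TensorFlow model itself is omitted) turns one price series into input windows and next-step targets, then splits them chronologically, since shuffling time-series data before splitting would leak future information into training.

```python
def make_windows(prices, window):
    """Return (X, y): each X[i] holds `window` consecutive prices, y[i] the next price."""
    X, y = [], []
    for i in range(len(prices) - window):
        X.append(prices[i:i + window])
        y.append(prices[i + window])
    return X, y

def chronological_split(X, y, train_frac=0.8):
    """Keep time order: earlier windows for training, later ones for testing."""
    cut = int(len(X) * train_frac)
    return (X[:cut], y[:cut]), (X[cut:], y[cut:])
```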

SpaceX advertises Falcon 9 rocket launches on its website at a cost of 62 million dollars, while other providers charge upward of 165 million dollars each; much of the savings comes from SpaceX's ability to reuse the first stage. In this project, we create a machine learning pipeline to predict whether the first stage will land, using the data from the preceding labs.

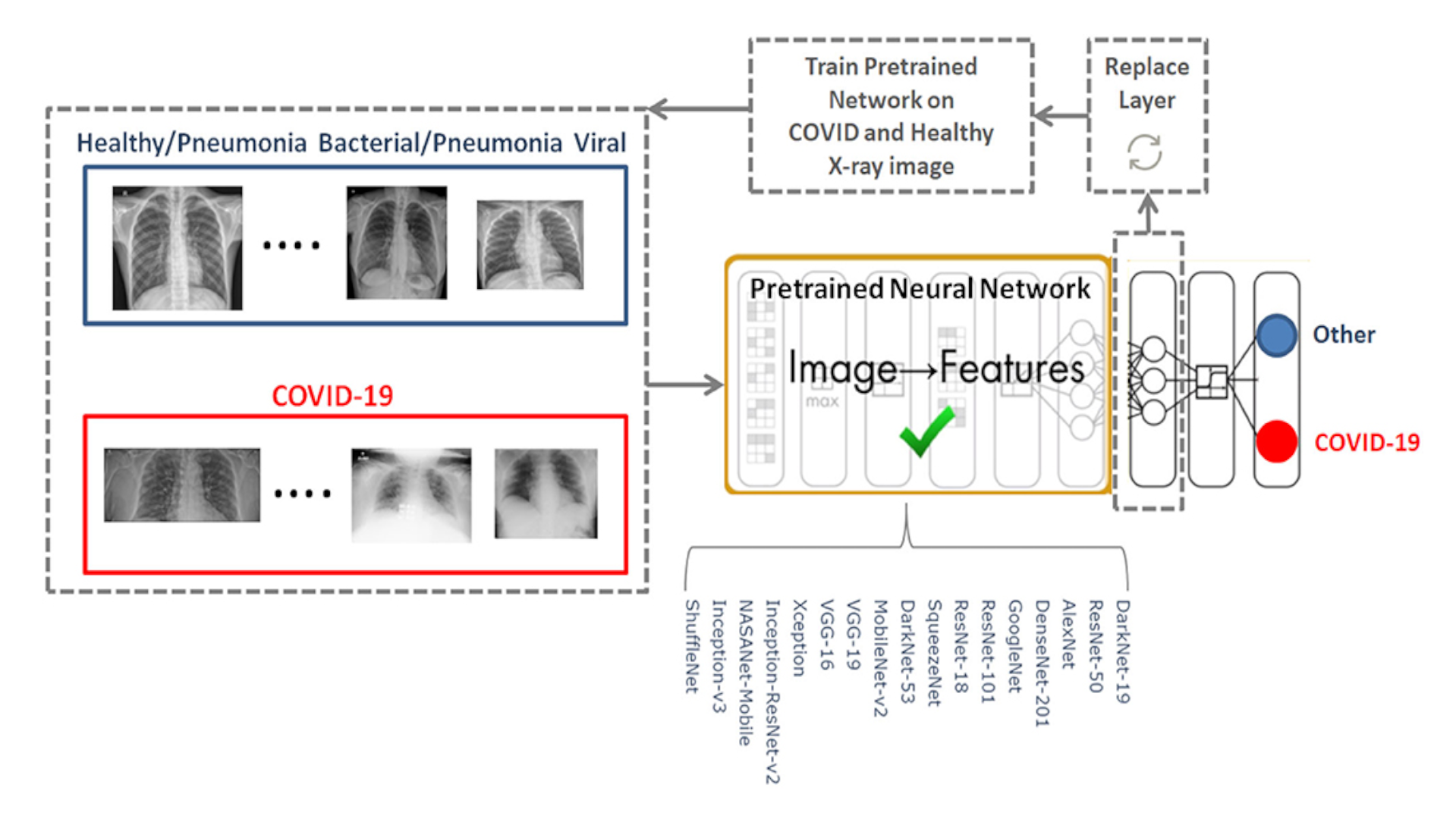

I developed a deep learning model to detect COVID-19 from chest X-ray images using the ResNet-50 architecture. The project involved collecting and preprocessing a dataset of X-ray images, fine-tuning a pre-trained ResNet-50 model, and optimizing its performance through training and validation. The model achieved high accuracy, demonstrating significant potential to aid the medical diagnosis of COVID-19.

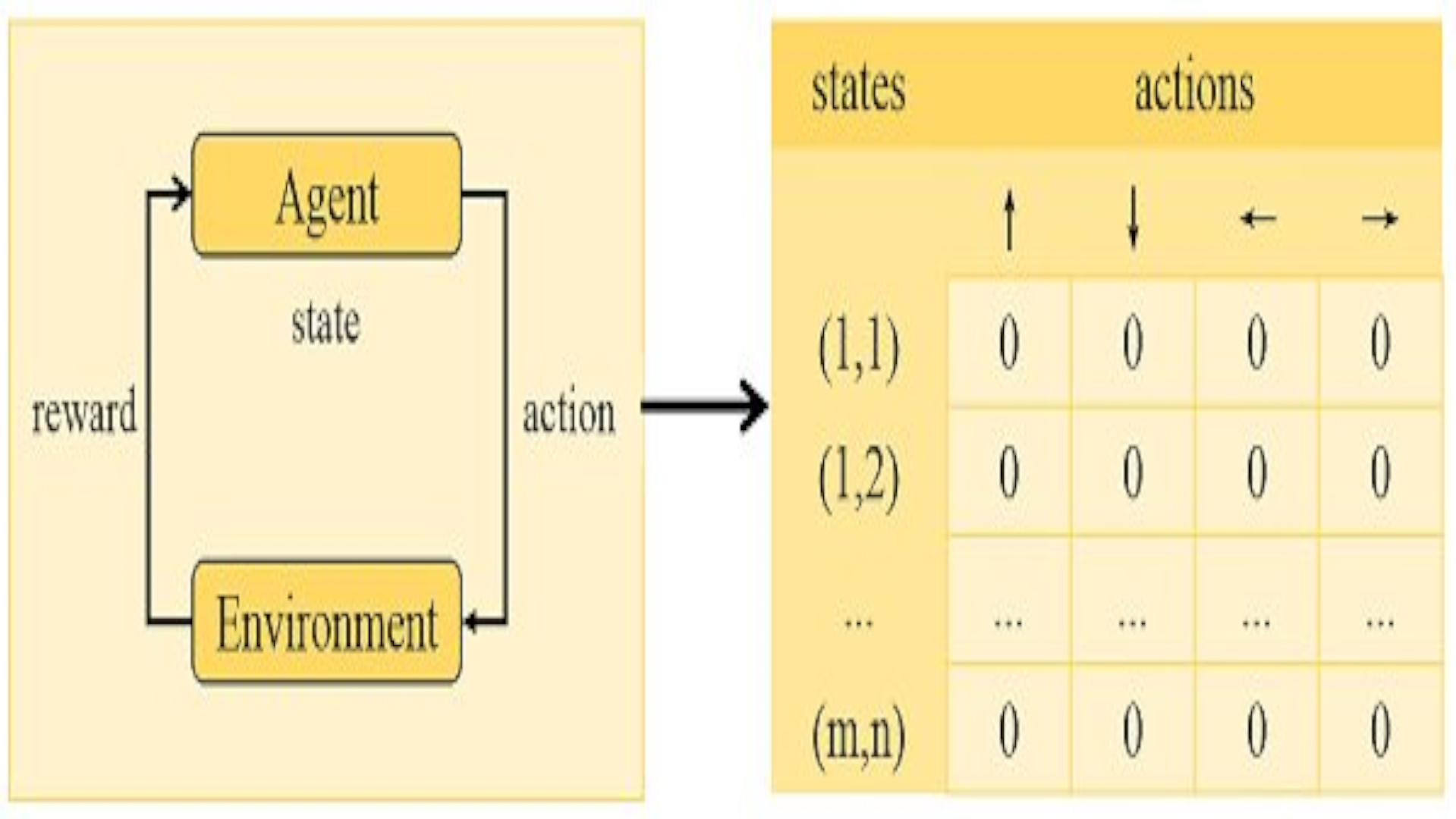

Q-learning is a model-free reinforcement learning algorithm that learns the value of taking an action in a particular state.
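A minimal sketch of tabular Q-learning on a hypothetical five-state corridor, where the agent starts at state 0 and earns a reward of 1 for reaching state 4. The environment, the hyperparameters (learning rate `alpha`, discount `gamma`, exploration rate `epsilon`), and all names are illustrative assumptions.

```python
import random

N_STATES, GOAL = 5, 4
MOVES = (-1, +1)  # action 0 = left, action 1 = right

def step(state, action):
    """Hypothetical corridor: walls at both ends, reward 1 on reaching GOAL."""
    nxt = min(max(state + MOVES[action], 0), N_STATES - 1)
    return nxt, (1.0 if nxt == GOAL else 0.0), nxt == GOAL

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # Epsilon-greedy: explore at random, otherwise exploit (ties broken randomly).
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                best = max(q[s])
                a = rng.choice([i for i in range(2) if q[s][i] == best])
            s2, r, done = step(s, a)
            # Update: Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a)),
            # with no future value once the terminal goal state is reached.
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])
            s = s2
    return q
```

After training, the greedy policy moves right in every non-goal state, and the learned values shrink by roughly a factor of `gamma` for each step away from the goal.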

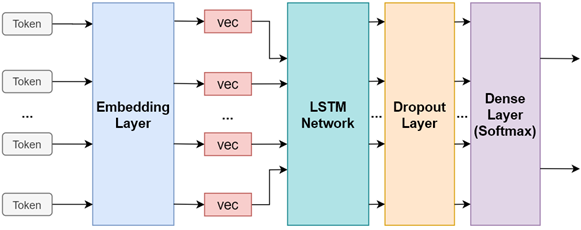

In this project, my goal is to explore and understand the process of classifying email as spam or legitimate, a classic binary classification problem. This method solves real-world problems: by detecting unsolicited and unwanted emails, we can block harmful spam messages from anonymous senders (for example, in Gmail).
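The project description does not name a model; one classic choice for this problem is multinomial naive Bayes. Below is a from-scratch sketch with add-one (Laplace) smoothing. The regex tokenizer and the class name are hypothetical simplifications.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

class NaiveBayesSpamFilter:
    """Multinomial naive Bayes with add-one smoothing."""

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        return self

    def predict(self, text):
        n_docs = sum(self.doc_counts.values())
        best, best_score = None, -math.inf
        for c in self.classes:
            total = sum(self.word_counts[c].values())
            # Log prior plus summed log likelihoods avoids floating-point underflow.
            score = math.log(self.doc_counts[c] / n_docs)
            for w in tokenize(text):
                score += math.log((self.word_counts[c][w] + 1) / (total + len(self.vocab)))
            if score > best_score:
                best, best_score = c, score
        return best
```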

Predicting the price of cryptocurrencies is a popular case study in the data science community. The prices of stocks and cryptocurrencies are influenced by more than just the number of buyers and sellers; nowadays, changes in government financial policies toward cryptocurrencies also affect their prices.

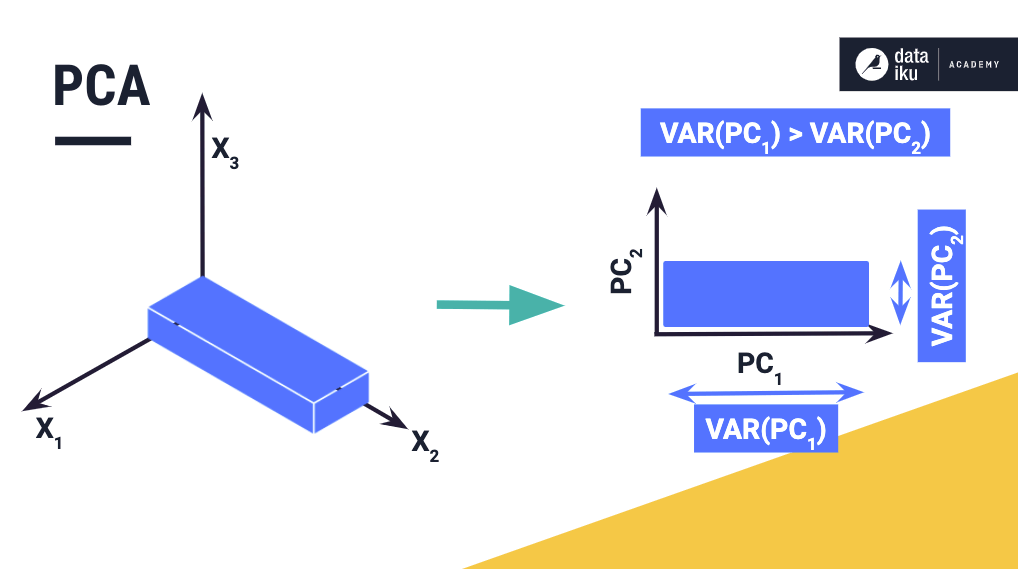

Principal Component Analysis (PCA) is a powerful dimensionality reduction technique widely used in various fields to identify patterns and reduce the number of features in a dataset while retaining essential information. My project explains and implements PCA, showcasing its applications in machine learning and image processing.
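A minimal plain-Python sketch of the core of PCA: center the data, build the sample covariance matrix, and find its leading eigenvector (the first principal component) by power iteration. The names are hypothetical, and power iteration assumes the starting vector is not orthogonal to the top eigenvector; libraries such as scikit-learn's `PCA` use a singular value decomposition instead.

```python
import math

def top_principal_component(data, iters=200):
    """Return (component, eigenvalue, centered_data) for a list of d-dimensional rows."""
    n, d = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in data]
    # Sample covariance matrix (d x d).
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    v = [1.0] * d
    for _ in range(iters):
        # Repeatedly multiply by the covariance matrix and renormalize.
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient v^T C v: the variance captured along v.
    eigenvalue = sum(v[i] * sum(cov[i][j] * v[j] for j in range(d)) for i in range(d))
    return v, eigenvalue, centered

def project(centered, component):
    """One-dimensional coordinates of each centered row along the component."""
    return [sum(a * b for a, b in zip(row, component)) for row in centered]
```

Projecting the centered data onto this component gives the one-dimensional representation that retains the most variance.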

In this activity, we will complete a binomial logistic regression. This exercise will help us better understand the value of using logistic regression to make predictions for a binary dependent variable based on one independent variable.
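A binomial logistic regression with one predictor models the probability of the positive class as `sigmoid(b0 + b1 * x)`. The sketch below fits the two coefficients by batch gradient descent on the mean log-loss. The learning rate and epoch count are illustrative assumptions, and in practice a library such as statsmodels or scikit-learn would normally do the fitting.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(x, y, lr=0.1, epochs=5000):
    """Fit P(y=1 | x) = sigmoid(b0 + b1 * x) by batch gradient descent."""
    b0 = b1 = 0.0
    n = len(x)
    for _ in range(epochs):
        g0 = g1 = 0.0
        for xi, yi in zip(x, y):
            # Derivative of the log-loss with respect to the linear predictor.
            err = sigmoid(b0 + b1 * xi) - yi
            g0 += err
            g1 += err * xi
        b0 -= lr * g0 / n
        b1 -= lr * g1 / n
    return b0, b1
```

A positive `b1` means that larger values of the independent variable raise the predicted probability of the positive class; predictions above 0.5 are typically classified as 1.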

In this project, we examine which variables affect the gross revenue of movies.
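One simple first pass at this question is to rank candidate variables by their Pearson correlation with gross revenue. The sketch below assumes each variable is a list of numbers aligned with the gross figures; the function names are hypothetical, and correlation measures only linear association, not causation.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length numeric lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def rank_by_correlation(features, target):
    """Sort candidate variables by the absolute value of their correlation with the target."""
    scores = {name: pearson_r(values, target) for name, values in features.items()}
    return sorted(scores.items(), key=lambda kv: abs(kv[1]), reverse=True)
```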

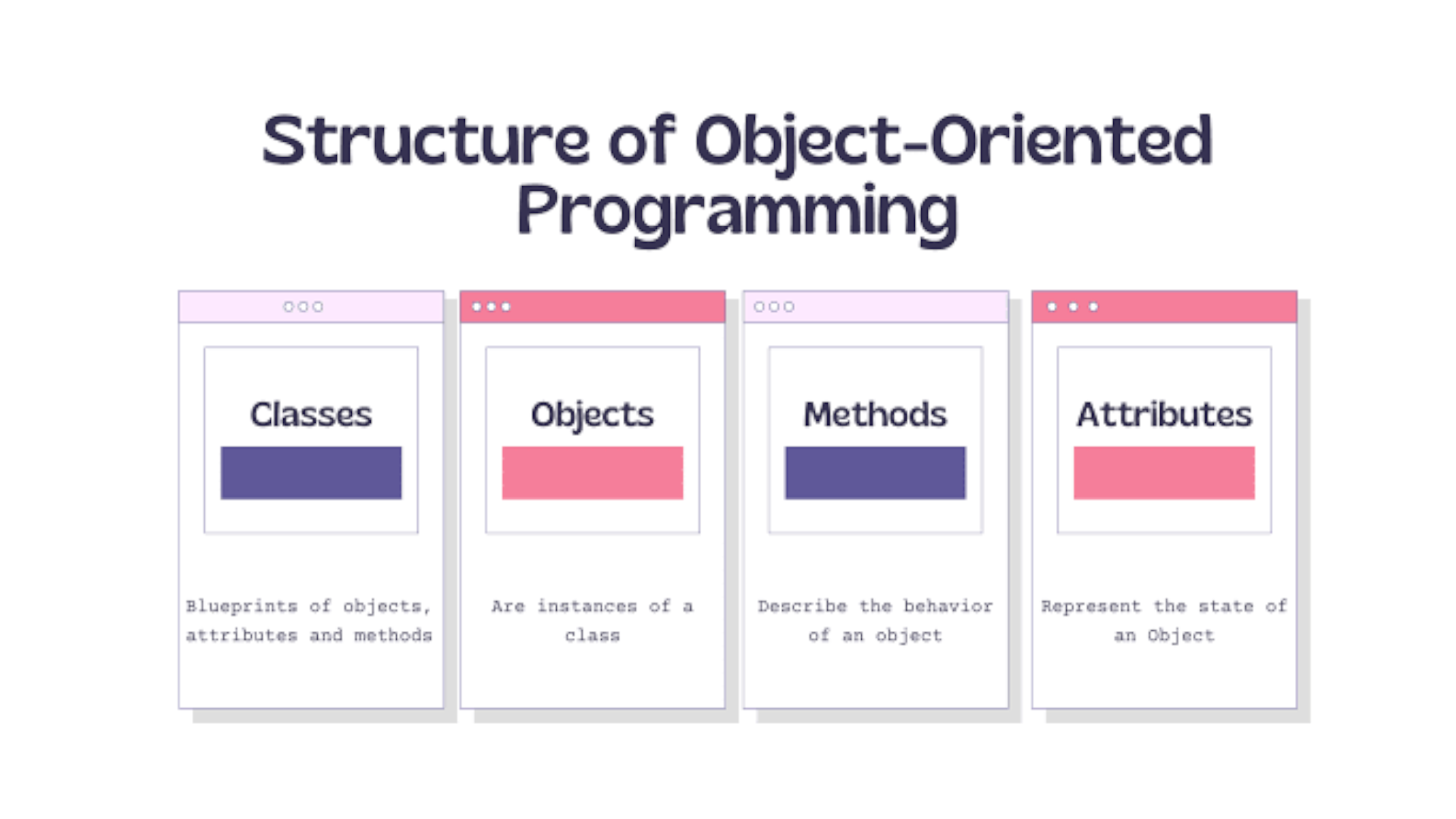

Object-oriented programming (OOP) is a paradigm that developers use to organize software into reusable blueprints (classes) and the objects created from them. OOP emphasizes the objects to be manipulated rather than the logic needed to manipulate them. This approach is well suited to large, complex programs that are actively maintained or updated.
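A short Python sketch of these ideas: an abstract base class acts as the blueprint, each subclass provides its own implementation of `area`, and calling code can work with any `Shape` without knowing its concrete type. The classes are illustrative and not taken from a specific project.

```python
import math
from abc import ABC, abstractmethod

class Shape(ABC):
    """Blueprint: every shape must know how to compute its own area."""

    @abstractmethod
    def area(self) -> float: ...

    def describe(self) -> str:
        # Shared behaviour inherited by every subclass.
        return f"{type(self).__name__} with area {self.area():.2f}"

class Rectangle(Shape):
    def __init__(self, width, height):
        self.width, self.height = width, height

    def area(self):
        return self.width * self.height

class Circle(Shape):
    def __init__(self, radius):
        self.radius = radius

    def area(self):
        return math.pi * self.radius ** 2
```

Usage: `[s.describe() for s in (Rectangle(2, 3), Circle(1))]` calls the right `area` for each object (polymorphism), while `Shape()` itself cannot be instantiated because `area` is abstract.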